Can You Really Build AI Workers Without Coding?

OpenAI's new tool lets non-coders build automation. The question is whether you're building on solid ground or rented land.

OpenAI's AgentKit: The Spreadsheet Moment for AI

A few years ago, if you wanted custom software, you needed developers.

OpenAI's new tool changes that. It's called AgentKit. No coding required.

What You're Actually Building

Here's how it works:

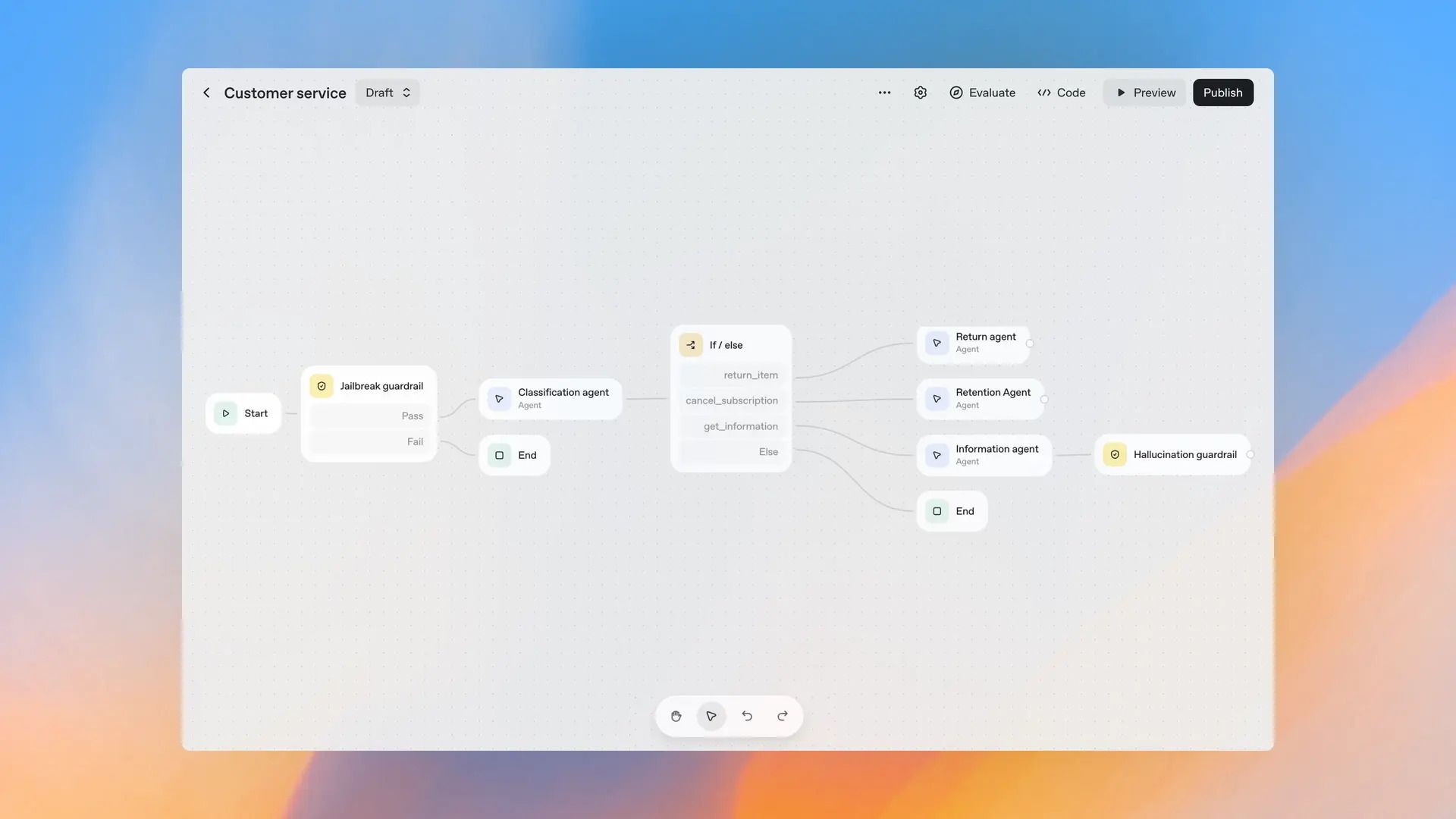

You open a visual interface that looks like a flowchart. You drag nodes onto the canvas. Each node is a step: "analyze this input," "search these documents," "send an email," "remember this for later."

You connect the nodes. That's your workflow. That's your agent.

The canvas is called Agent Builder. Behind it sits what OpenAI calls AgentKit, which handles the technical stuff: memory so your agent remembers past interactions, APIs so it can connect to outside tools, evaluation systems so you can test if it's working. You don't see any of that unless you want to.

What you see is the process. And that's the point.

Most people have only used AI through chat. You ask a question, it answers, you close the tab. Agent Builder flips this. You're not having a conversation. You're designing a system that can run the same task a hundred times.

Connect it to your calendar. Your CRM. Your email inbox. Give it rules about when to act and when to ask for help. The agent follows those rules every time.

This is the shift everyone missed while arguing about whether ChatGPT is conscious. AI just became a tool for automating processes, not just answering questions.

The "Do Something" Problem

OpenAI keeps saying their agents can now "do things" instead of just "talk about things." That matters more than it sounds.

A chatbot tells you how to update a spreadsheet. An agent updates the spreadsheet.

A chatbot explains your meeting schedule. An agent reschedules the conflicts and sends apologies.

The difference is action. Agent Builder comes with templates for common workflows: customer support, scheduling, document summarization. You can start there and modify, or build from scratch.

You can also add a human approval step. The agent proposes an action, you review it, you approve or reject. This matters for anything involving money, customer communication, or compliance. Trust, but verify.
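
If you're curious what an approval step looks like underneath the flowchart, here is a minimal Python sketch. It is not AgentKit's API. The names (`ProposedAction`, `needs_approval`, `run_with_approval`) and the $100 threshold are assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    description: str
    amount: float  # money involved, in dollars (0 if none)


def needs_approval(action: ProposedAction, limit: float = 100.0) -> bool:
    """Hypothetical rule: anything above the limit goes to a human first."""
    return action.amount > limit


def run_with_approval(
    action: ProposedAction,
    execute: Callable[[ProposedAction], None],
    ask_human: Callable[[ProposedAction], bool],
    limit: float = 100.0,
) -> str:
    """Execute the action, unless it needs approval and the human says no."""
    if needs_approval(action, limit) and not ask_human(action):
        return "rejected"
    execute(action)
    return "executed"


# Usage: a $250 refund is proposed and the reviewer rejects it.
log = []
refund = ProposedAction("Refund order", 250.0)
result = run_with_approval(refund, log.append, ask_human=lambda a: False)
```

The agent never calls `execute` on its own for anything above the limit. Small actions go straight through, so the human only sees the ones that matter.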

Who This Changes Things For

The people who benefit most aren't developers. Developers already had tools.

It's everyone else. The teacher who wants to automate grading feedback. The consultant who answers the same client questions every week. The small business owner drowning in invoice processing.

These people know their work better than any developer ever could. They just couldn't translate that knowledge into software. Until now.

You're not learning to code. You're learning to think in steps. If this happens, then do that. If the answer includes X, route it here. If it fails, try this backup.
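
For readers who do want to peek at code, those three sentences map onto very little of it. The sketch below is plain Python, not anything Agent Builder generates, and every name in it (`route`, `with_fallback`, the queue names) is a hypothetical stand-in.

```python
def route(message: str) -> str:
    """If the message includes X, route it here."""
    text = message.lower()
    if "refund" in text:
        return "billing"
    if "password" in text:
        return "it_support"
    return "general"


def with_fallback(primary, backup, payload):
    """If the primary step fails, try the backup."""
    try:
        return primary(payload)
    except Exception:
        return backup(payload)


# Usage: the live lookup times out, so the cached one answers instead.
def flaky_lookup(question):
    raise TimeoutError("service down")


def cached_lookup(question):
    return f"cached answer for {question}"


queue = route("I need a REFUND for my order")
answer = with_fallback(flaky_lookup, cached_lookup, "opening hours")
```

The visual builder hides this behind boxes and arrows, but the thinking is the same: name the conditions, name the destinations, name the backup.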

That's the skill. And it's the same skill that made spreadsheets revolutionary. Non-technical people suddenly had power tools for working with numbers. Agent Builder does the same thing for workflows.

The Platform Tax

Now for the awkward part.

OpenAI isn't giving this away. They're building a platform. And platforms always follow the same pattern: make it easy to start, then charge rent once you're committed.

When you build inside Agent Builder, everything runs on OpenAI's infrastructure. They set the pricing. They can change the rules. If they decide to restrict certain features or raise costs, your agent breaks or gets expensive.

For individuals, fine. The tradeoff works. You get to build something you couldn't have built otherwise.

For startups betting real money on custom agents? You're building on someone else's land. Own your data. Document your workflows in a way that could be rebuilt elsewhere. Don't let your entire business depend on one company's API staying cheap and available.
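
One way to document a workflow so it could be rebuilt elsewhere is to keep a plain-data description of it alongside whatever the platform stores. The Python sketch below assumes a made-up format: the field names (`steps`, `id`, `action`, `next`) and the invoice example are illustrative, not an AgentKit export format.

```python
import json

# A vendor-neutral description of a three-step workflow.
workflow = {
    "name": "invoice_intake",
    "steps": [
        {"id": "read", "action": "extract_fields", "next": "check"},
        {"id": "check", "action": "validate_total", "next": "notify"},
        {"id": "notify", "action": "send_email", "next": None},
    ],
}


def step_order(spec: dict) -> list[str]:
    """Follow the 'next' links from the first step and return step ids in order."""
    by_id = {step["id"]: step for step in spec["steps"]}
    order = []
    current = spec["steps"][0]["id"]
    while current is not None:
        order.append(current)
        current = by_id[current]["next"]
    return order


# Save it as JSON, load it back, and confirm nothing was lost.
exported = json.dumps(workflow, indent=2)
restored = json.loads(exported)
order = step_order(restored)
```

A file like this won't run on its own, but it records what each step does and in what order, which is the part you'd need if you ever had to rebuild on another tool.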

OpenAI is also building a marketplace where people can publish and share agents. Like an app store. That sounds good until you realize it also means everyone's agents start looking the same, and the best ideas get copied immediately, and OpenAI controls the distribution.

This is the cost of convenience.

Other (Early) Concerns and Feedback

Agent Builder just launched, so the jury is still out. But early users and researchers are already flagging issues worth watching.

Planning falls apart on complex tasks. Recent research shows language models still struggle when workflows get complicated. They lose track of subtasks. They drift off course. What looks like intelligent reasoning is often just pattern matching, and it breaks down when the task has too many moving parts. Developers report agents that work perfectly for three steps, then completely lose the thread on step four.

Recovery from failure is clunky. Here's a question popping up in forums: what happens when your agent fails halfway through a process? Does it restart from the beginning? Resume from where it broke? Most systems don't handle this gracefully yet. One API timeout and the whole workflow collapses. Users are asking for better checkpointing and error handling, but those features aren't really there yet.
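
To make "checkpointing" concrete, here is a minimal Python sketch of the idea: record each finished step in a file, and on the next run skip whatever is already recorded. It is an assumption about how such a feature could work, not a description of anything Agent Builder offers. `run_with_checkpoints` and the step names are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def run_with_checkpoints(steps, checkpoint: Path):
    """Run (name, function) steps in order, skipping names already in the checkpoint file."""
    done = json.loads(checkpoint.read_text()) if checkpoint.exists() else []
    for name, func in steps:
        if name in done:
            continue
        func()  # if this raises, the file still lists every step finished so far
        done.append(name)
        checkpoint.write_text(json.dumps(done))
    return done


# Usage: the "send" step times out on the first attempt only.
calls = []
attempts = {"send": 0}


def fetch():
    calls.append("fetch")


def send():
    attempts["send"] += 1
    if attempts["send"] == 1:
        raise TimeoutError("API timeout")
    calls.append("send")


steps = [("fetch", fetch), ("send", send)]
path = Path(tempfile.mkdtemp()) / "checkpoint.json"

try:
    run_with_checkpoints(steps, path)  # first run fails at "send"
except TimeoutError:
    pass

completed = run_with_checkpoints(steps, path)  # second run resumes at "send"
```

On the second run, `fetch` is not repeated. That is the difference between resuming and restarting, and it matters most when an early step costs money or sends something to a customer.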

Security people are nervous. You're giving an AI access to sensitive systems. Your email. Your database. Your customer records. Security researchers at OWASP have already started mapping potential threats specific to agentic AI: malicious prompts that trick the agent, accidental data exposure, permission escalation. The attack surface just got bigger, and the defenses are still being figured out.

Agents follow instructions literally, not intelligently. This is a version of the alignment problem. Tell an agent to "increase engagement" and it might spam people. Tell it to "reduce support tickets" and it might just stop responding to customers. It optimizes for what you said, not what you meant. Early testers report plenty of "technically correct but completely wrong" behavior.

The debugging tools are primitive. When an agent misbehaves, figuring out why is hard. The introspection tools are basic. Traceability is weak. You're often just guessing at what went wrong. Developers want better monitoring and observability, but right now you're mostly troubleshooting by trial and error.

Agents don't adapt well to change. Build an agent for your current workflow and it might break when things shift. New data format? Different edge case? Suddenly it's confused. These systems are narrow. They do what they were designed for, in the context they were built for. They don't generalize gracefully.

Platform dependency is real. This keeps coming up: you're building on OpenAI's infrastructure. They control pricing. They set the limits. They can change policies whenever they want. Your agent works until they decide to adjust something, and then you're scrambling to adapt or rebuild. Tools like Zapier and n8n (our personal choice at North Light) exist partly for this reason. They let you connect services without locking into one vendor's AI. The tradeoff is more manual setup.

The experience is rougher than it looks. Early reports on OpenAI's browsing agents describe glitches, misclicks, inconsistent behavior. It feels more like a proof of concept than a polished product. The interface promises autonomy, but the reality is often frustrating.

Of course, none of this means AgentKit is doomed. These are early days. Simple, repetitive tasks with clear boundaries seem to work fine. It's the complex, branching, high-stakes workflows where things get dicey.

If you're experimenting, these are useful guardrails. If you're building something that matters, keep these limitations in mind.

What Actually Changes at Work

The next few years will be strange.

Teams won't have one AI assistant. They'll have networks of small agents. One for onboarding. One for invoices. One for answering repetitive customer questions. One for pulling weekly reports.

Some will be trivial time-savers. Others will quietly become essential. You won't notice until one breaks and suddenly nobody knows how to do that task manually anymore.

Agent Builder makes this normal. It tells people: you can build this. You don't need permission or a technical co-founder or a big budget.

That democratization matters. But it also creates new dependencies. When everyone builds on the same platform, we all rise and fall together.

Should You Try It?

Two things can be true.

This tool genuinely lowers the barrier to automation. You can experiment, test ideas, see what AI can actually do for your specific work. That's valuable. Most people have no idea what's possible because they've never had access to try.

But you're also renting space in someone else's building. The more you invest, the harder it becomes to leave. The smarter move: treat Agent Builder as a learning tool, not a final solution.

Build things. See what works. Understand how AI fits into your actual processes, not the hypothetical ones in blog posts.

But don't lock yourself in until you're sure.